I'm a computational scientist who builds and deploys production-grade machine learning systems end-to-end. My work sits at the intersection of quantitative finance and modern ML — taking raw, messy market data and transforming it into strategies that run autonomously in the cloud. I hold a PhD from the University of Texas at Austin, where I developed a deep foundation in numerical methods, stochastic modeling, and high-performance computing. That academic rigor now powers everything from time-series embedding models and regime detection to multi-objective portfolio optimization and reinforcement learning.

Over the past three years, I've shipped automated trading systems, NLP-powered analytics platforms, and real-time dashboards — always with an emphasis on clean infrastructure, rigorous backtesting, and production reliability. I care about the full lifecycle: feature engineering, model development, optimization, deployment, and monitoring.

My core focus was building ML-driven alpha signals and the infrastructure to trade on them. I engineered fast data pipelines using multi-index lookups and vectorized pandas operations, then used Amazon's Chronos foundation model to extract features from time-series embeddings. Features were scored on out-of-sample AUC and SHAP attributions, with the Kneedle knee-detection algorithm automating the selection cutoff. For regime awareness, I deployed Hidden Markov Models, using the Bayesian Information Criterion for model selection, so the system could adapt its behavior across different market environments.
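To illustrate the BIC step in general terms, here is a minimal sketch rather than the production code. It assumes Gaussian emissions with diagonal covariances when counting free parameters, and the log-likelihood values are made-up placeholders standing in for fitted models; the function names are hypothetical.

```python
import math


def gaussian_hmm_param_count(n_states: int, n_features: int) -> int:
    """Free parameters of a Gaussian HMM with diagonal covariances (an assumption)."""
    initial = n_states - 1                    # start probabilities sum to 1
    transitions = n_states * (n_states - 1)   # each transition row sums to 1
    means = n_states * n_features
    variances = n_states * n_features
    return initial + transitions + means + variances


def bic(log_likelihood: float, n_params: int, n_samples: int) -> float:
    """Bayesian Information Criterion: k * ln(n) - 2 * ln(L). Lower is better."""
    return n_params * math.log(n_samples) - 2.0 * log_likelihood


def select_n_states(log_likelihoods: dict, n_features: int, n_samples: int) -> int:
    """Return the state count whose fitted model has the lowest BIC."""
    scores = {
        k: bic(ll, gaussian_hmm_param_count(k, n_features), n_samples)
        for k, ll in log_likelihoods.items()
    }
    return min(scores, key=scores.get)


# Placeholder log-likelihoods keyed by number of hidden states (not real results).
fitted = {2: -1450.0, 3: -1400.0, 4: -1395.0}
best = select_n_states(fitted, n_features=1, n_samples=1000)
print(best)
```

With these placeholder numbers the four-state model fits slightly better than the three-state one, but the BIC penalty on its extra parameters outweighs the gain, so three states are selected.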

On the optimization side, I generated Pareto fronts using NSGA-II multi-objective optimization with configurable trade-off rules, and implemented constrained nonlinear optimization (SLSQP) for stochastic volatility model calibration. I also developed a reinforcement learning-based portfolio optimizer using Conservative Q-Learning, and explored optimal signal subsets through genetic algorithms with tournament selection, crossover, and mutation. Bayesian optimization with Tree-structured Parzen Estimators handled signal discovery in high-dimensional search spaces.
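As a small illustration of what a Pareto front and a trade-off rule look like, the sketch below filters non-dominated candidates and then picks one with a weighted sum. It is not NSGA-II itself (there is no population, crossover, or crowding distance), only the dominance test that NSGA-II's sorting builds on. The objectives (negated return and drawdown, both minimized), the candidate values, and the weights are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b on every objective and strictly better on one.

    All objectives are treated as quantities to minimize.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def pick_by_weights(front: List[Point], weights: Sequence[float]) -> Point:
    """One possible trade-off rule: the lowest weighted sum of objectives."""
    return min(front, key=lambda p: sum(w * x for w, x in zip(weights, p)))


# Hypothetical candidates as (negated return, drawdown) pairs.
candidates = [
    (-0.12, 0.20),
    (-0.10, 0.10),
    (-0.08, 0.15),
    (-0.05, 0.05),
    (-0.12, 0.25),
]

front = pareto_front(candidates)
choice = pick_by_weights(front, weights=(1.0, 0.5))
print(front)
print(choice)
```

Changing the weights moves the chosen point along the front without rerunning the search, which is the practical appeal of keeping the trade-off rule configurable.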

The production stack ran on AWS: CloudFormation for infrastructure-as-code, GitHub Actions for CI/CD, Docker for packaging, and Lambda plus EventBridge for serverless orchestration. I deployed interday options trading powered by Chronos embeddings with S3-backed inference, intraday options trading driven by FFT-based anomaly detection, and a weekly regime-adaptive sector ETF rebalancing system with execution cost tracking. A React frontend backed by DynamoDB provided real-time position tracking and daily performance dashboards.

I also built an NLP analytics layer: a FastAPI service powered by Groq-hosted Llama and FAISS for RAG, serving natural-language explanations of US Treasury press releases. Routing logic, implemented in LangGraph, was derived from UMAP and HDBSCAN analysis of the corpus. Llama Guard handled semantic injection detection, Presidio handled PII redaction, and Guardrails AI enforced structured output. Observability came from LangSmith tracing and an offline evaluation suite.

I joined this early-stage startup during the critical integration phase of a robotic surgical navigation platform that combined medical imaging with robotic actuation. My work centered on implementing TCP/UDP communication protocols to control the robotic arm in real time. I also contributed to end-to-end product packaging using the Poetry dependency manager and deployment via Azure tooling. The culmination was a successful live system demonstration that supported the company's Series A fundraising.

Here I adapted physics-informed modeling — originally developed for fusion experiment data — to financial time series. The production pipeline consisted of sparse regression for PDE construction, automated signal generation, and execution. I implemented CAGR-maximizing Bayesian optimization using Tree-structured Parzen Estimators for swing trade threshold estimation. The entire system was deployed on AWS Lambda using CloudFormation/SAM, GitHub Actions, Docker, and EventBridge-based scheduling.

ML & Optimization

Deep experience across model training, feature engineering, and algorithmic optimization — from gradient-based methods to evolutionary search.

Cloud & MLOps

Serverless-first production systems with full CI/CD, infrastructure-as-code, and container orchestration on AWS.

Programming

Python-centric workflow with a focus on high-performance numerics and reactive frontends for data visualization.

APIs & Web

Production API design with real-time data feeds from brokerage platforms and robust request validation.

NLP & LLMs

End-to-end RAG pipelines with guardrails, observability, and semantic analysis for unstructured text at scale.

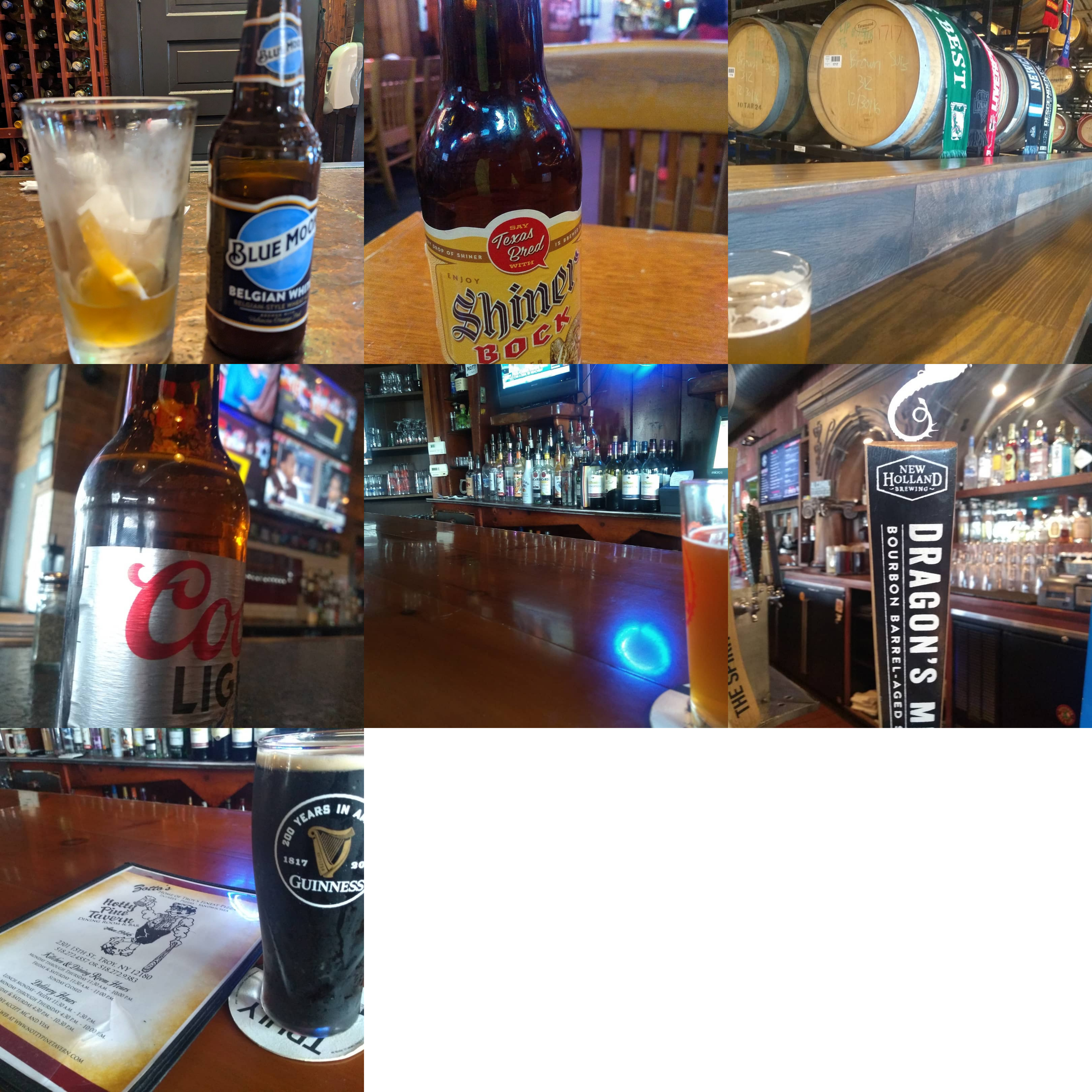

Beyond the code